Filippo Castorio is a Research Assistant at the Copenhagen Legal/Tech Lab, University of Copenhagen – Faculty of Law

1. Introduction: Generative Artificial Intelligence and its Impact on Reality

As technology becomes deeply intertwined with every facet of our lives, Generative Artificial Intelligence (GenAI) has emerged, bringing a mix of opportunities and complex hurdles. This blog post offers a comprehensive exploration of GenAI, its significant implications for the creation and dissemination of information, and its impact on how we perceive and engage with news. It addresses the pressing legal and ethical concerns that arise from using GenAI to produce and spread potentially misleading content. The emphasis is on striking a balance between embracing GenAI's advances and remaining vigilant in safeguarding against its capacity to distort reality.

Last September, I ventured to Paris for the first time. Many had warned me that the sight of the Eiffel Tower might be a disappointment. Yet, contrary to expectations, my first encounter with it, at night, left me utterly captivated.

That initial fascination, however, was overshadowed months later by an unsettling experience. While casually browsing X (formerly Twitter), I came across a shockingly realistic video depicting the Eiffel Tower in flames. The imagery was so convincing that it took me a moment to realise this wasn’t reality but a product of GenAI, a technology that is becoming increasingly influential in creating highly realistic content.

2. The Rise of GenAI in Content Creation

Last year, “AI” was not just a term; it became the zeitgeist, leading Collins Dictionary to crown it word of the year. This accolade reflects the collective fascination and debate that AI sparked across many fields in 2023. Yet, if we distil this discourse to its essence, the real protagonist was clearly GenAI. Following the launch of tools like ChatGPT, this field of AI stopped being a niche interest and became a significant part of the lives of millions, directly or indirectly.

The World Economic Forum describes GenAI as a class of AI algorithms that can create entirely new content, drawing on the data they have been trained on. This content spans images, text, and audio.

As we delve deeper into the capabilities of GenAI, it’s fascinating to see its transformative impact on content creation, challenging our perceptions of creativity and authorship.

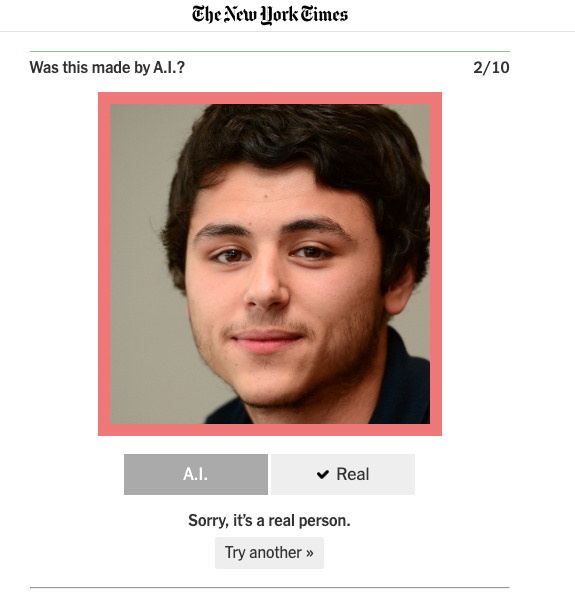

GenAI is revolutionising the way we approach content creation. This technology, encompassing chatbots, image generators, and voice cloners, is proficient at producing material that mirrors human craftsmanship. If you’re sceptical or simply curious about AI’s ability to generate realistic images, you might find it enlightening to take a test designed by The New York Times. The test challenges you to distinguish between images of real people and those created by AI, offering a practical demonstration of how advanced and convincing AI-generated content has become.

For many sectors, the allure of GenAI primarily lies in its efficiency. Professionals and content creators stand to benefit significantly from these advancements. GenAI tools aid in numerous aspects, including idea generation, content planning and scheduling, search engine optimisation, and even research and editing tasks. By streamlining time-consuming tasks, these tools allow individuals to allocate their time and energy more effectively, potentially unlocking new levels of productivity and creativity.

3. The Double-Edged Sword of GenAI and the Proliferation of AI-Generated Misinformation

While this new technology undeniably presents a plethora of benefits, it is equally crucial to acknowledge its drawbacks. A significant concern, highlighted in an analysis published on Medium, is the “exploitation of weaknesses in the system to manipulate data”, which can lead to a phenomenon known as “AI package hallucination”, in which the AI “generates convincing but false information”.

AI-generated images, including those depicting high-profile figures in unlikely scenarios, have circulated widely, misleading some and sparking debate among many. These developments highlight the growing challenge of discerning real from fake content online, raising concerns about the potential for misuse in spreading misinformation and deepening societal divides.

According to the Freedom on the Net 2023 report released by Freedom House, GenAI “threatens to supercharge online disinformation campaigns”. Specifically, the report notes that over the past year the new technology was used in at least 16 countries “to sow doubt, smear opponents, or influence public debate”.

The global discourse underscores the urgency of collaborative regulation for GenAI, a point highlighted by UN Secretary-General António Guterres’ remarks on AI’s double-edged nature. This pivotal moment calls for a united approach built on international cooperation to develop standards that maximise AI’s benefits while minimising its risks. By prioritising human oversight, ethical principles, and inclusivity, the global community can navigate the challenges posed by GenAI.

The consequences of GenAI’s unchecked growth are already manifesting, particularly concerning news and information, where distinguishing fact from fiction becomes increasingly challenging.

Recent findings from NewsGuard have revealed a startling surge in “unreliable AI-generated news and information sites” across the internet, now numbering over 700. These platforms often bear generic, authoritative-sounding names like iBusiness Day, Ireland Top News, and Daily Time Update, which at first glance might suggest reputable news sources. However, these sites operate with little or no human oversight, producing a vast array of articles on topics such as politics, technology, entertainment, and travel. The integrity of this information is questionable, as the articles sometimes propagate false claims: misleading statements about political figures, celebrity death hoaxes, invented incidents, and old news presented as current events.

According to a recent report by The Washington Post, the reasons behind the proliferation of these AI-generated news sites are diverse. Some creators aim to influence political opinions or cause disruption, while others focus on generating polarising content to attract clicks and thereby increase advertising revenue.

Technology has long played a role in spreading misinformation. Whether AI-generated news will be used to sway political campaigns remains uncertain, but it is a looming worry. According to Brewster, it seems plausible that politicians could use such sites to publish favourable narratives about themselves or to spread false information about their rivals.

4. Navigating the Ethical Minefield of GenAI

As we examine the broader implications of GenAI, it’s essential to engage in a nuanced discourse that considers both its potential to innovate and its capacity to harm.

The discourse around the potential dangers of GenAI in the public sphere and its impact on democracy is complex. While concerns about AI’s role in spreading misinformation and undermining democratic processes are valid, there is also a risk that overly alarmist and speculative warnings could lead to unintended negative consequences.

GenAI raises significant questions about the principle of free speech. On the one hand, AI has the potential to enrich public discourse, offering new tools for creativity and expression. On the other, it can be used to generate harmful content that undermines democratic processes and individual reputations.

Among the risks highlighted by the United Nations is GenAI’s capacity to create convincing yet false content, which can be misused to silence critics and vulnerable groups through targeted disinformation campaigns. Additionally, the overwhelming presence of AI-generated misinformation threatens to “overshadow factual information, undermining public trust in media and democratic processes”.

However, according to McCarthy-Jones, applying free speech principles indiscriminately to AI technologies presents a paradox. Imagine a scenario in which our digital ecosystems become saturated with AI-generated content. This AI-powered flood could overwhelm our information spaces with misinformation, effectively turning the internet into a battleground of “propaganda and untruth.” The challenge then becomes how to stem this tide of falsehood without veering into censorship, which poses its own set of ethical dilemmas.

The European Union’s draft AI Act, which aims to regulate GenAI technologies, brings to the forefront the intricate balance between regulation and the promotion of free thought. An analysis by The Conversation highlights that the Act mandates the disclosure of AI-generated content. This could enhance our ability to evaluate such content critically, thereby supporting free thought. However, it also entertains the notion of allowing anonymous AI speech, which could reduce the pressure on AI developers to censor legal but controversial content and encourage AI-generated information to be judged on its merits. At the same time, the requirement for AI models to avoid generating illegal content, including hate speech, risks overly cautious content moderation.

As technology evolves, so too must our legal and ethical frameworks. McCarthy-Jones explains that the concept of a “right to think with technology” underscores the importance of maintaining our ability to engage freely with AI and other digital tools in shaping our thoughts. Yet the push for AI to be safe, aligned, and loyal raises the question of whether such constraints could impede our freedom of thought. As we navigate the complexities of AI development and regulation, ensuring that these efforts do not infringe upon our fundamental right to freedom of thought will be crucial for preserving individual autonomy in the digital age.

5. From Blockchain to Digital Literacy: Strategies for Authenticating AI-Generated Information

In response to the challenges posed by GenAI, innovative solutions are emerging to authenticate AI-generated content, ensuring its integrity and reliability.

As the quality of GenAI output continues to improve, distinguishing between real and AI-generated content becomes increasingly challenging.

The implementation of AI authentication techniques plays a crucial role in enhancing the integrity and reliability of AI-generated content. These methods are designed to mitigate risks associated with AI outputs by ensuring authenticity and accuracy. The process of content verification is instrumental in building a foundation of trust in GenAI technologies. It enables more constructive engagement with digital content and enriches online experiences.

Currently, various methods are in place to verify the authenticity of multimedia content, though each comes with its own set of limitations. One approach relies on verification by third parties or those close to the content source. Another strategy involves embedding watermarks or invisible markers within AI-generated content to signal its authenticity or lack thereof.
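To make the idea of an “invisible marker” more concrete, here is a minimal sketch of one classic (and deliberately simple) technique: hiding a short tag in the least significant bit of each pixel of a grayscale image. It assumes the image is a flat list of 0–255 integers, and the function names `embed_marker` and `read_marker` and the tag `"AI-GEN"` are hypothetical illustrations, not any provider’s actual watermarking scheme.

```python
from typing import List


def embed_marker(pixels: List[int], marker: str) -> List[int]:
    """Hide `marker` in the least significant bit of successive pixel values (0-255).

    Hypothetical toy scheme: each pixel changes by at most 1, so the image looks identical.
    """
    # Turn the marker into a flat list of bits, 8 per UTF-8 byte.
    bits = [int(b) for byte in marker.encode("utf-8") for b in format(byte, "08b")]
    if len(bits) > len(pixels):
        raise ValueError("image too small to hold the marker")
    out = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the marker bit.
        out[i] = (out[i] & ~1) | bit
    return out


def read_marker(pixels: List[int], length: int) -> str:
    """Recover a marker of `length` bytes from the pixels' least significant bits."""
    bits = [p & 1 for p in pixels[: length * 8]]
    data = bytes(
        int("".join(str(b) for b in bits[i : i + 8]), 2) for i in range(0, len(bits), 8)
    )
    return data.decode("utf-8")


original = [120] * 64
marked = embed_marker(original, "AI-GEN")
print(read_marker(marked, 6))
```

This also illustrates the limitations mentioned above: a marker of this kind disappears as soon as the image is recompressed, resized, or cropped, which is why production watermarking schemes are considerably more robust and complex.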

Blockchain technology emerges as a promising solution to these verification challenges. If a blockchain repository of verified, original content were established, any multimedia asset not listed in it could be deemed questionable or inauthentic. This would allow the public to verify the authenticity of content independently, treating any material not recorded on the blockchain with due scepticism. Blockchain technology’s inherent features (transparency, immutability, and accessibility) make it an exceptionally suitable mechanism for online content authentication.
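The repository idea can be sketched as an append-only, hash-linked ledger of content fingerprints. The sketch below is a single-machine toy that assumes each verified file is fingerprinted with SHA-256; the `ContentRegistry` and `Block` names are hypothetical, and it deliberately omits the distributed consensus, digital signatures, and replication that a real blockchain would provide.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Block:
    index: int
    content_hash: str  # SHA-256 fingerprint of a verified media file
    prev_hash: str     # block_hash of the previous block, linking the chain
    block_hash: str    # hash over this block's own fields


def _hash_block(index: int, content_hash: str, prev_hash: str) -> str:
    return hashlib.sha256(f"{index}|{content_hash}|{prev_hash}".encode()).hexdigest()


class ContentRegistry:
    """Hypothetical toy ledger: append-only, hash-linked, no network or consensus."""

    def __init__(self) -> None:
        genesis = "0" * 64
        self.chain: List[Block] = [Block(0, genesis, genesis, _hash_block(0, genesis, genesis))]

    def register(self, media: bytes) -> Block:
        """Record the fingerprint of a verified, original file."""
        prev = self.chain[-1]
        content_hash = hashlib.sha256(media).hexdigest()
        index = prev.index + 1
        block = Block(index, content_hash, prev.block_hash,
                      _hash_block(index, content_hash, prev.block_hash))
        self.chain.append(block)
        return block

    def is_registered(self, media: bytes) -> bool:
        """True only if this exact file was registered; anything else merits scepticism."""
        h = hashlib.sha256(media).hexdigest()
        return any(b.content_hash == h for b in self.chain[1:])

    def chain_is_intact(self) -> bool:
        """Detect tampering: every block must hash correctly and point to its predecessor."""
        for prev, cur in zip(self.chain, self.chain[1:]):
            if cur.prev_hash != prev.block_hash:
                return False
            if cur.block_hash != _hash_block(cur.index, cur.content_hash, cur.prev_hash):
                return False
        return True


registry = ContentRegistry()
registry.register(b"original photo bytes")
print(registry.is_registered(b"original photo bytes"), registry.is_registered(b"edited photo bytes"))
```

Because the check uses an exact cryptographic hash, even a harmless re-encoding of an authentic photo would fail the lookup; a practical system would likely need perceptual hashing or signed provenance metadata alongside the ledger.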

According to experts from Virginia Tech, “addressing this challenge [of misinformation] requires collaboration between human users and technology. While LLMs have contributed to the proliferation of fake news, they also present potential tools to detect and weed out misinformation. […] Users and news agencies bear the responsibility not to amplify or share false information, and additionally, users reporting potential misinformation will help refine AI-based detection tools, speeding the identification process”. They added: “There is a global recognition that something needs to be done. This is vitally important given that the U.S., U.K., India, and the E.U. all have important elections in 2024, which will likely see a host of disinformation posted throughout social media.”

Julia Feerrar, digital literacy educator at Virginia Tech, emphasises the importance of scrutinising the source of information to combat misinformation. Checking whether the content comes from a well-known, credible news organisation versus an unfamiliar source is key. She advises conducting a quick online search to verify the credibility of the source, suggesting the use of lateral reading. This method involves looking beyond the initial content to understand its origin and checking if other reliable media are covering the same story.

Feerrar also offers additional advice:

- Be wary of content that strongly appeals to emotions, and take a moment to reflect before reacting.

- Use “fact-check” in search queries to verify headlines and images.

- Simple, vague website names might indicate AI-generated news.

- AI-generated articles sometimes include error messages about violating usage policies, which less diligent sites might not remove.

- For AI-generated images, unnatural hands and feet, or an overly polished look, can serve as warning signs.
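The tip about leftover error messages lends itself to a simple automated check. The sketch below scans an article for a few phrases of the kind chatbots produce when they refuse a request; the `TELLTALE_PATTERNS` list and the `find_ai_leftovers` function are hypothetical examples, not an established detector, and a clean result says nothing about whether a human wrote the text.

```python
import re
from typing import List

# Hypothetical examples of refusal boilerplate; real content farms may leave different wording.
TELLTALE_PATTERNS = [
    r"as an ai language model",
    r"i cannot (?:fulfill|complete) (?:this|that|your) request",
    r"violates? (?:openai's |our )?(?:usage|content) polic(?:y|ies)",
]


def find_ai_leftovers(article_text: str) -> List[str]:
    """Return the telltale phrases found in the article (case-insensitive)."""
    text = article_text.lower()
    hits = []
    for pattern in TELLTALE_PATTERNS:
        match = re.search(pattern, text)
        if match:
            hits.append(match.group(0))
    return hits


print(find_ai_leftovers("Breaking: I cannot fulfill this request as it violates OpenAI's usage policy."))
```

A match is a strong hint that an article was generated and published without human review, but like the other tips above it should prompt lateral reading rather than replace it.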

From its ability to impress us with its creations to the challenges it poses, GenAI is clearly reshaping how we perceive and communicate. While it simplifies tasks and opens new avenues for creativity, it also leaves us with the responsibility of combating misinformation and verifying online content. The shift from marvelling at AI-generated visuals to grasping their implications highlights the need for caution and reflection in how we harness this tool. Looking ahead, striking a balance means leveraging the advantages of GenAI while collaboratively addressing its drawbacks, so that it enhances rather than complicates our digital landscape.